posted by Alex Mathews

The recent progress on image recognition and language modeling is making automatic description of image content a reality. However, stylized, non-factual aspects of the written description are missing from the current systems.

One such style is descriptions with emotions, which is commonplace in everyday communication, and influences decision-making and interpersonal relationships. We design a system to describe an image with emotions, and present a model that automatically generates captions with positive or negative sentiments. We propose a novel switching recurrent neural network with word-level regularization, which is able to produce emotional image captions using only 2000+ training sentences containing sentiments. We evaluate the captions with different automatic and crowd-sourcing metrics. Our model compares favourably in common quality metrics for image captioning. In 84.6% of cases the generated positive captions were judged as being at least as descriptive as the factual captions. Of these positive captions 88% were confirmed by the crowd-sourced workers as having the appropriate sentiment.

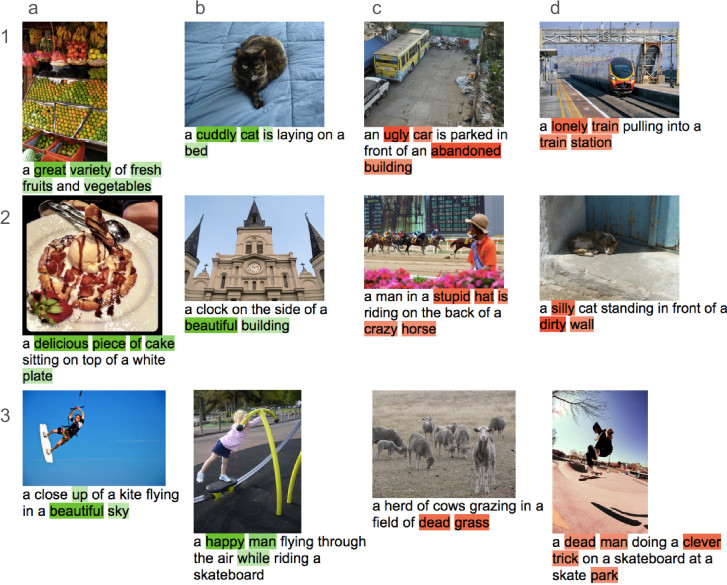

Sample Results

Examples of captions generated by SentiCap. The captions in columns a and b express a positive sentiment, while the captions in columns c and d express a negative sentiment. The coloring behind words indicates the weight given to the model trained on the sentiment dataset. The darker the coloring the higher the weight. See the paper for full details.

Resources

The paper is published in AAAI 2016 SentiCap: Generating Image Descriptions with Sentiments, by Alex Mathews, Lexing Xie, Xuming He. http://arxiv.org/abs/1510.01431

- A combined PDF of the paper and supplemental material is here.

- Example results: sentences with positive sentiment and negative sentiment.

- The SentiCap dataset collected from Amazon mTurk is here.

- The list of Adjective Noun Pairs (ANPs) is here

- The original code is here. It uses theano without neural network libraries (because at the time this was the best option).

- All training/test data and pre-trained models are made available here. To use, one will need to unzip both into the same directory and then follow the README to train/test the model. CNN fc7 features are read from disk – they are included with the data – so to use a new set of images, please extract features using your favorite CNN (code to do this is not included).