Posted by Yue Liu and Haojie Zhuang, with edits contributed by Lexing Xie.

Event Information

Hosted by the ANU Computational Media Lab in partnership with ANU’s Integrated AI Network, CSIRO NSF Project and Data61 Tech4HSE Research Program, this one-day in-person event brings together a distinguished group of researchers and practitioners to explore how artificial intelligence can address critical challenges in climate, environmental, and public and industrial safety. The detailed workshop program is available at https://events.humanitix.com/ai4good-2025

Themes & Focus

The event spotlights AI’s transformative potential across three interlinked domains:

Climate Resilience: leveraging predictive modeling and environmental data to support adaptation strategies;

Safety & Health: using AI to enhance regulatory compliance and training;

Environmental Monitoring: applying machine learning to counting and assessing ecosystems using non-traditional data sources.

The workshop was opened by Prof Tony Hosking, Director of the School of Computing at ANU. He told the 50+ participants that all of the grand challenges posed by the Computing Research Association in 2025 relate to AI.

Workshop Highlights

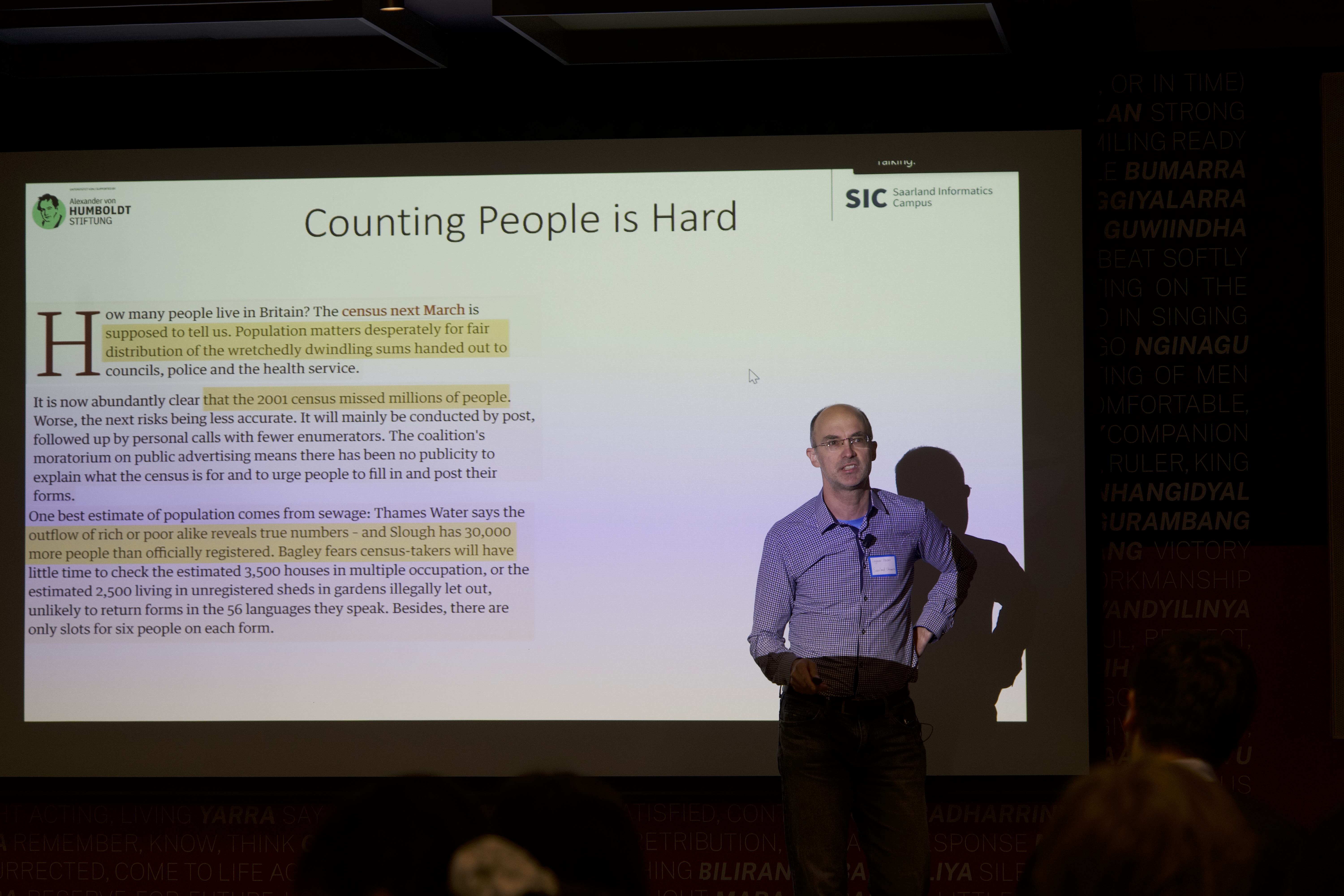

Two keynote speeches provided broad and inspiring context for the whole audience. The first, by Professor Ingmar Weber from Saarland University, presented the challenge of measurement and counting at large scale. In particular, his work explored how high-resolution satellite imagery can illuminate societal dynamics such as migration, gender inequality, poverty, and public health. His thought-provoking talk sparked audience discussion of novel applications, from estimating water depth to exploring data sources beyond social media.

In the afternoon keynote, Professor Xuemei Bai (ANU Fenner School of Environment & Society) presented the research challenges of urbanization under climate change. She discussed how cities can become transformative agents and help each other in times of change, and asked how AI can help tackle these challenges facing humanity. Her talk provided context for related projects by junior researchers, including Dr Sombol Mokhles and Chenyi Du.

The first session after the morning break provided an overview of several active projects that underpin this workshop. Professor Lexing Xie addressed the information gaps between climate science, policy, and human decision-making. Professor Wenjie Zhang (UNSW) and Professor Jing Jiang showcased how LLM-based systems can support Health, Safety, and Environment (HSE) compliance assessment and training. Professor Liang Zheng demonstrated methods for translating multimodal data into code while preserving source characteristics, and Dr Xiwei Xu shared the commercialization pathway of CSIRO’s Tech4HSE project, highlighting collaboration between academia and industry.

The Early-career Researcher Spotlight featured diverse projects: Dr Yue Liu and Xiuyuan Yuan presented comparative analyses of complex documents, with case studies on the Work Health and Safety Regulations and the United Nations Framework Convention on Climate Change. Dr Haojie Zhuang discussed LLM-based assessment of practitioners’ HSE competence through natural language interaction. Ziyu Chen explored AI for classifying moral and human values, while Karen Zhou (University of Chicago) demonstrated an AI tool that helps voters make informed decisions. Dr Jieshan Chen (CSIRO’s Data61) examined defenses against deceptive interfaces, and Dr Yonghui Liu shared design and coding approaches for web development.

After a short break, participants joined breakout sessions. One group examined the challenges they face in their own domains, while the other discussed what AI can and cannot address. The whole group later reconvened to share key takeaways:

AI is applied in diverse areas including remote sensing, environmental monitoring, regulation and policy analysis, and multimodal-to-code translation.

It is essential to identify target users and understand their goals, contexts, and constraints. Elicit user requirements, map them to the solution space, and ensure AI aligns with real user needs rather than just technical potential.

The effectiveness of AI depends heavily on the quality, representativeness, and sources of training data; gaps or biases can reduce performance or introduce risks.

Natural language is inherently ambiguous, and differing assumptions or background knowledge can lead to misinterpretation between humans and LLMs.

AI is not always the optimal or necessary solution. In some cases, traditional methods (e.g., rule-based systems) may be more efficient, interpretable, or trustworthy, with AI serving as a supportive tool.

In addition, the workshop was preceded by a pre-event on 4 August featuring a panel discussion and roundtable mentorship. Senior researchers shared insights on research directions, career pathways, and personal growth. Students valued the opportunity for constructive feedback and one-on-one conversations, and drew inspiration from the experiences and guidance of established scholars.

Acknowledgement

We thank all participants, speakers, and volunteers for their time, energy, and thoughtful contributions. We acknowledge and pay respect to the Ngunnawal and Ngambri people, the Traditional Custodians of the land on which this event was held.

Photo credit: Ziyu Chen